ACI Multisite Connectivity Design and Deployment use cases

I- Intra-EPG Connectivity Across Sites

For intra-EPG connectivity across sites, the required objects must be defined in the Template-Stretched:

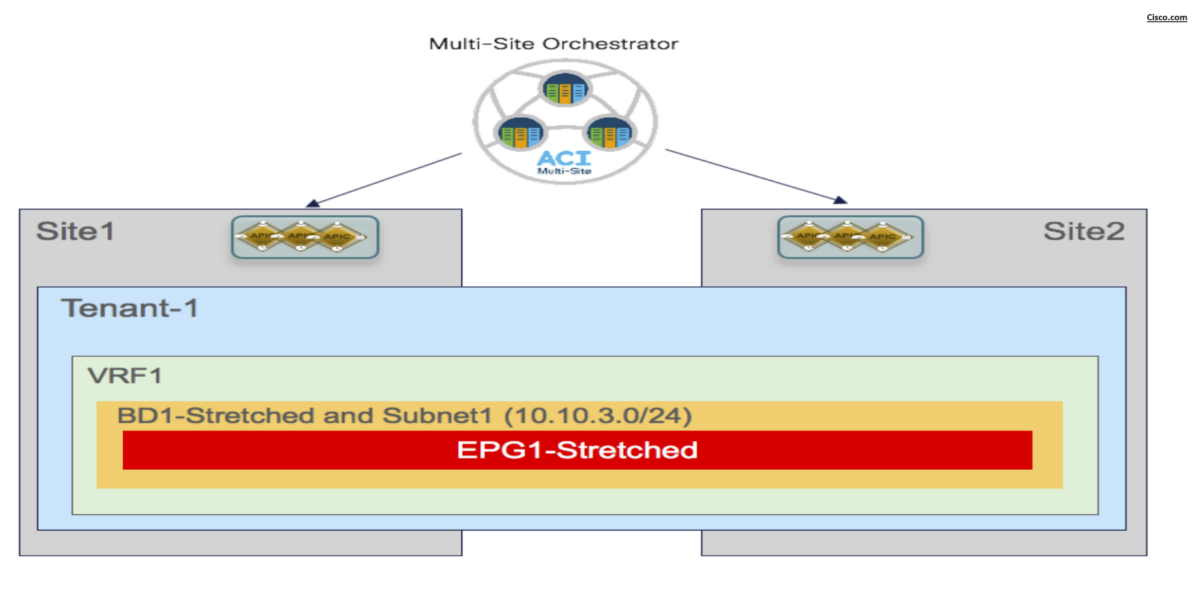

Scenario-1: Stretched EPG, Stretched BD and Stretched Subnet

The EPG is mapped to a stretched BD, and the IP subnet(s) associated with the BD are also stretched across sites. As a result, intra-subnet communication can be enabled between endpoints connected to different sites.

This deployment is suited to use cases that require L2 stretching across sites.

Notes for BD stretching

– The BD must be associated with the stretched VRF1 defined previously.

– The BD is stretched by enabling the “L2 Stretch” knob. In most use cases, the recommendation is to keep the “Intersite BUM Traffic Allow” knob disabled.

– Since the BD is stretched, the BD subnet is also defined at the template level, because it must be extended across sites as well.
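The three notes above can be turned into a simple configuration check. This is a minimal sketch: the `BridgeDomain` fields and the `check_stretched_bd` helper are hypothetical and do not correspond to the NDO API or object model. They only encode the rules listed in the notes, and the gateway subnet 10.10.1.254/24 is a made-up value.

```python
from dataclasses import dataclass, field


@dataclass
class BridgeDomain:
    # Hypothetical model of a BD defined in the Template-Stretched (not the NDO schema).
    name: str
    vrf: str
    l2_stretch: bool
    intersite_bum: bool = False
    # Subnets defined at template level (hypothetical field).
    template_subnets: list[str] = field(default_factory=list)


def check_stretched_bd(bd: BridgeDomain, stretched_vrfs: set[str]) -> list[str]:
    """Return warnings for a BD intended for Scenario-1 (stretched BD and subnet)."""
    warnings = []
    if bd.vrf not in stretched_vrfs:
        warnings.append(f"{bd.name}: VRF {bd.vrf} is not a stretched VRF")
    if not bd.l2_stretch:
        warnings.append(f"{bd.name}: 'L2 Stretch' must be enabled")
    if bd.intersite_bum:
        # Not an error, but the notes recommend keeping it disabled in most cases.
        warnings.append(f"{bd.name}: consider disabling 'Intersite BUM Traffic Allow'")
    if not bd.template_subnets:
        warnings.append(f"{bd.name}: define the BD subnet at template level")
    return warnings


# 10.10.1.254/24 is a hypothetical gateway subnet.
bd1 = BridgeDomain("BD1-Stretched", "VRF1", l2_stretch=True, template_subnets=["10.10.1.254/24"])
print(check_stretched_bd(bd1, {"VRF1"}))  # []
```

A BD that violates every note (wrong VRF, no L2 Stretch, BUM enabled, no template subnet) would return four warnings.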

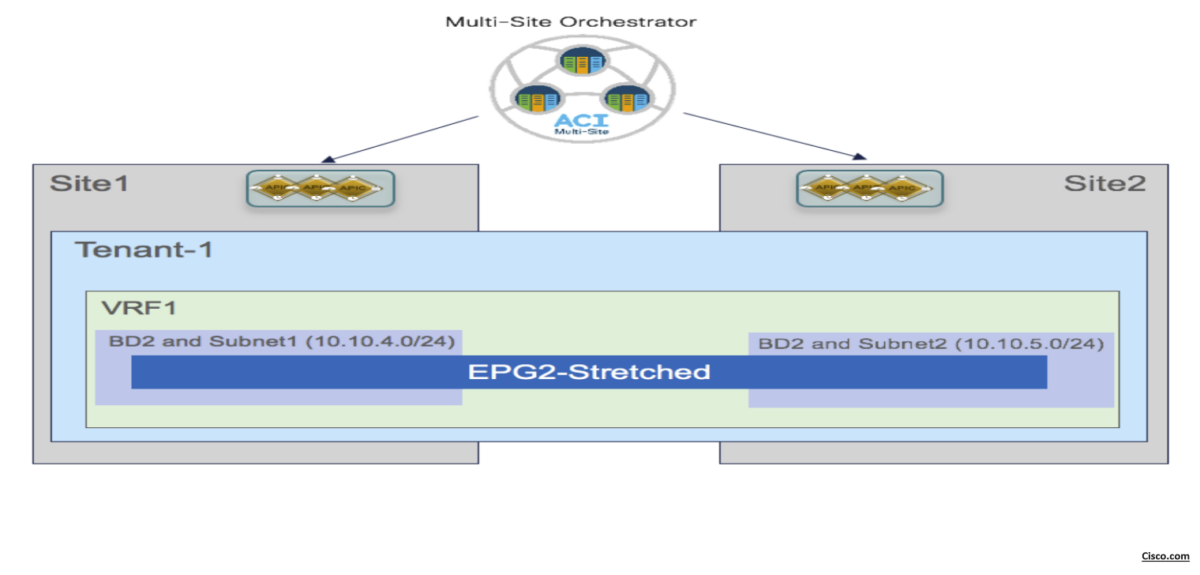

Scenario-2: Stretching the EPG without Stretching BD and Subnet

The EPG is still stretched across sites, but the BD and its subnet(s) are not, which means that intra-EPG communication between endpoints connected to separate sites is Layer 3 (routed) rather than Layer 2.

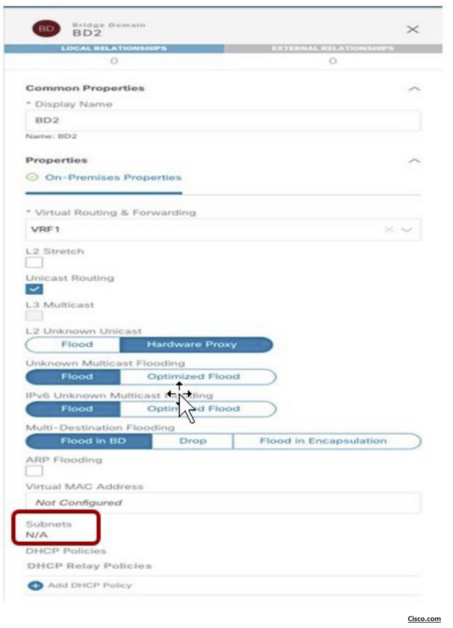

Since an EPG can be associated with only a single BD, the same BD object must be created in both sites, even though the BD forwarding behavior is non-stretched. This is achieved by deploying the BD inside the Template-Stretched and configuring it as follows:

- The BD is associated with the same stretched VRF1 defined previously.

- The BD must be configured with the “L2 Stretch” knob disabled, as we don’t want to extend the BD subnet or allow L2 communication across sites.

- The BD subnet is configured at the site level, not at the template level, because the BD isn’t stretched in this scenario.

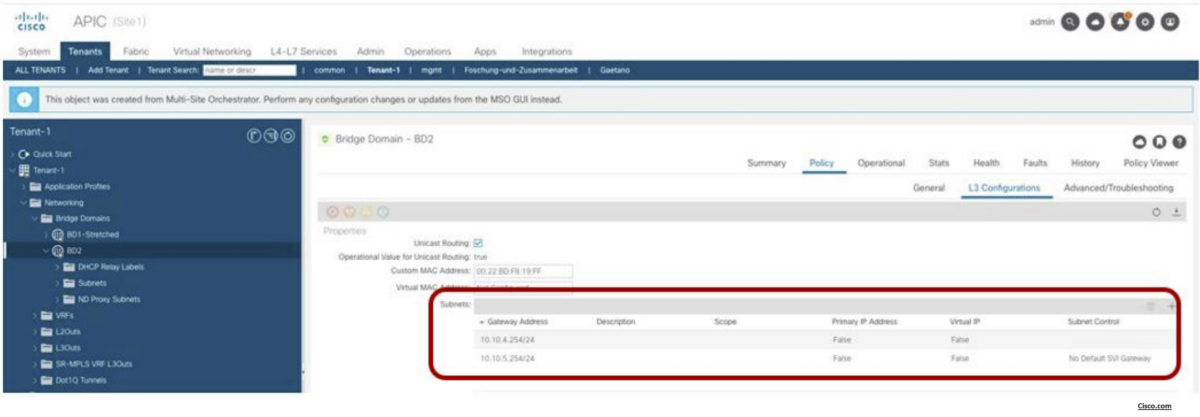

BD Shadow Subnet

On APIC (site level), the BD in Site1 is configured with both IP subnets, but only the one configured at the Site1 level on Nexus Dashboard Orchestrator (10.10.4.0/24) provides default gateway services for the endpoints. The other IP subnet (10.10.5.0/24), referred to as the “Shadow Subnet”, is automatically provisioned with the “No Default SVI Gateway” parameter, because it is installed on the Site1 leaf nodes only to allow routing across sites when endpoints that are part of the same EPG communicate.

The “Shadow Subnet” is always provisioned with the “Private to VRF” scope, regardless of the settings the same subnet has in its original site. As a result, the “Shadow Subnet” prefix can never be advertised out of an L3Out in the site where it is instantiated.

To advertise a BD subnet out of the L3Outs of different sites, the BD must be deployed with the “L2 Stretch” flag set.
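The provisioning rules described in this section can be modelled in a few lines. This is a minimal sketch under stated assumptions: the `SiteSubnet` type and the `provision_site_subnets` and `advertisable_via_l3out` functions are hypothetical and not part of NDO or APIC. The two prefixes come from the example above, while the original scope of each subnet ("public", that is, Advertised Externally) is an assumption, and the L3Out check is reduced to the subnet scope only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteSubnet:
    # Hypothetical representation of a BD subnet as pushed to one site's APIC.
    prefix: str
    default_gateway: bool  # False models the "No Default SVI Gateway" flag
    scope: str             # "public" (Advertised Externally) or "private" (Private to VRF)
    shadow: bool


def provision_site_subnets(site: str, subnets_by_site: dict[str, tuple[str, str]]) -> list[SiteSubnet]:
    """subnets_by_site maps a site name to (prefix, scope) as configured at site level on NDO."""
    result = []
    for owner, (prefix, scope) in subnets_by_site.items():
        if owner == site:
            # Local subnet: keeps its configured scope and provides the anycast gateway.
            result.append(SiteSubnet(prefix, default_gateway=True, scope=scope, shadow=False))
        else:
            # Shadow subnet: only used to route toward the remote site,
            # always Private to VRF regardless of the original scope.
            result.append(SiteSubnet(prefix, default_gateway=False, scope="private", shadow=True))
    return result


def advertisable_via_l3out(subnet: SiteSubnet) -> bool:
    # Simplified: a shadow subnet is never advertised; a local one only if its scope allows it.
    return not subnet.shadow and subnet.scope == "public"


subnets = {"Site1": ("10.10.4.0/24", "public"), "Site2": ("10.10.5.0/24", "public")}
for s in provision_site_subnets("Site1", subnets):
    print(s.prefix, s.default_gateway, s.scope, advertisable_via_l3out(s))
# 10.10.4.0/24 True public True
# 10.10.5.0/24 False private False
```

Calling `provision_site_subnets("Site2", subnets)` gives the mirror result, with 10.10.4.0/24 as the shadow subnet.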

Verify Intra-EPG communication

The routing table of the leaf nodes where the endpoints are connected shows that the IP subnet of the local endpoint is instantiated locally (with the corresponding anycast gateway address), and that the IP subnet of the endpoints in the remote site is also instantiated locally as a pervasive route, with the proxy-VTEP address of the local spines (10.1.112.66) as next hop.

```
Leaf103-Site1# show ip route vrf Tenant-1:VRF1
IP Route Table for VRF "Tenant-1:VRF1"
'*' denotes best ucast next-hop
'**' denotes best mcast next-hop
'[x/y]' denotes [preference/metric]
'%<string>' in via output denotes VRF <string>

10.10.4.0/24, ubest/mbest: 1/0, attached, direct, pervasive
    *via 10.1.112.66%overlay-1, [1/0], 00:09:58, static, tag 4294967294
10.10.4.254/32, ubest/mbest: 1/0, attached, pervasive
    *via 10.10.4.254, vlan10, [0/0], 00:09:58, local, local
10.10.5.0/24, ubest/mbest: 1/0, attached, direct, pervasive
    *via 10.1.112.66%overlay-1, [1/0], 00:09:58, static, tag 4294967294
```

The subnet entry in the routing table is used to forward routed traffic across sites until the leaf nodes learn the specific IP addresses of the remote endpoints through data-plane learning.

In Site1, once data-plane learning of the remote endpoint in Site2 has happened, the next hop of the VXLAN tunnel used to reach that endpoint is the O-UTEP address of the remote fabric. A similar output would be obtained on the leaf node in Site2.
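The handover from the pervasive subnet route to the learned remote endpoint comes down to a longest-prefix-match decision. The sketch below uses Python's standard `ipaddress` module. The prefixes and the proxy-VTEP address 10.1.112.66 come from the routing-table output in this section, while the remote endpoint 10.10.5.10 and the O-UTEP address 172.16.200.1 are hypothetical. It is a simplification: a real leaf keeps learned remote endpoints in its endpoint table rather than as /32 routes, so only the resulting forwarding decision is modelled.

```python
import ipaddress

# Simplified forwarding view of Leaf103-Site1 for VRF1 (sketch, not the real leaf tables).
# Prefix -> next hop. Both pervasive subnet routes point to the local spine proxy-VTEP.
routes = {
    ipaddress.ip_network("10.10.4.0/24"): "10.1.112.66 (proxy-VTEP)",
    ipaddress.ip_network("10.10.5.0/24"): "10.1.112.66 (proxy-VTEP)",
}


def lookup(dst: str) -> str:
    """Return the next hop of the longest matching prefix."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in routes if addr in net]
    if not matches:
        return "no route"
    best = max(matches, key=lambda net: net.prefixlen)
    return routes[best]


remote_ep = "10.10.5.10"  # hypothetical endpoint in Site2
print(lookup(remote_ep))  # 10.1.112.66 (proxy-VTEP)

# After data-plane learning, the remote endpoint is known behind the Site2 O-UTEP.
# 172.16.200.1 is a hypothetical O-UTEP address.
routes[ipaddress.ip_network(remote_ep + "/32")] = "172.16.200.1 (Site2 O-UTEP)"
print(lookup(remote_ep))  # 172.16.200.1 (Site2 O-UTEP)
```

Other, not yet learned endpoints in 10.10.5.0/24 keep following the pervasive route toward the proxy-VTEP.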

VNID translation

The outputs below show the entries on a spine in Site1 and a spine in Site2 that translate the Segment IDs and scopes of the BD and VRF stretched across sites. Translation mappings are created for VRF1 and BD1-Stretched, since those are stretched objects, but not for BD2, which is not “L2 stretched”.
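The vnid-maps and sclass-maps outputs in this section behave like lookup tables keyed on the remote site and the remote identifiers. The sketch below copies those entries into Python dictionaries to show two properties: an identifier with no entry (such as the VNID of a BD that is not L2-stretched) is simply not translated, and each site's table is the mirror image of the other site's. The dictionary layout, the `translate` and `mirrors` helpers, and the VNID 12345678 are illustrative assumptions, not how the spines store the data.

```python
# Entries copied from the "show dcimgr repo vnid-maps" and "sclass-maps" outputs.
# VRF:    (remote site, remote VRF VNID)              -> local VRF VNID
# BD:     (remote site, remote VRF VNID, BD VNID)     -> (local VRF VNID, local BD VNID)
# sclass: (remote site, remote VRF VNID, pcTag)       -> (local VRF VNID, local pcTag)
SITE1 = {
    "vrf": {(2, 2359299): 3112963},
    "bd": {(2, 2359299, 16252857): (3112963, 16154555)},
    "sclass": {
        (2, 2359299, 49155): (3112963, 49154),
        (2, 2359299, 49154): (3112963, 16387),
    },
}
SITE2 = {
    "vrf": {(1, 3112963): 2359299},
    "bd": {(1, 3112963, 16154555): (2359299, 16252857)},
    "sclass": {
        (1, 3112963, 49154): (2359299, 49155),
        (1, 3112963, 16387): (2359299, 49154),
    },
}


def translate(maps: dict, kind: str, key: tuple):
    """Return the local value for a remote key, or None if no mapping exists."""
    return maps[kind].get(key)


def mirrors(table_a: dict, site_a: int, table_b: dict) -> bool:
    """True if every entry of table_a (held on site_a) is reversed by table_b."""
    for key, local in table_a.items():
        remote = key[1:]  # remote identifiers without the site field
        local_tuple = local if isinstance(local, tuple) else (local,)
        expected = remote if len(remote) > 1 else remote[0]
        if table_b.get((site_a,) + local_tuple) != expected:
            return False
    return True


# Routed traffic from Site2 carries the Site2 VRF1 VNID; the Site1 spine rewrites it.
print(translate(SITE1, "vrf", (2, 2359299)))  # 3112963

# A BD VNID without an entry (as for BD2) is not translated. 12345678 is made up.
print(translate(SITE1, "bd", (2, 2359299, 12345678)))  # None

# Each site's tables are the mirror image of the other site's.
print(all(mirrors(SITE1[k], 1, SITE2[k]) for k in ("vrf", "bd", "sclass")))  # True
```

For example, pcTag 49155 in Site2 maps to 49154 in Site1, and the Site2 spine maps Site1's 49154 back to 49155.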

Spine 1101 Site1:

```
Spine1101-Site1# show dcimgr repo vnid-maps
--------------------------------------------------------------
            Remote             |          Local
site     Vrf         Bd        |   Vrf        Bd        Rel-state
--------------------------------------------------------------
2        2359299               |   3112963              [formed]
2        2359299     16252857  |   3112963    16154555  [formed]
```

Spine 401 Site2:

Spine401-Site2# show dcimgr repo vnid-maps

--------------------------------------------------------------

Remote | Local

site Vrf Bd | Vrf Bd Rel-state

--------------------------------------------------------------

1 3112963 | 2359299 [formed]

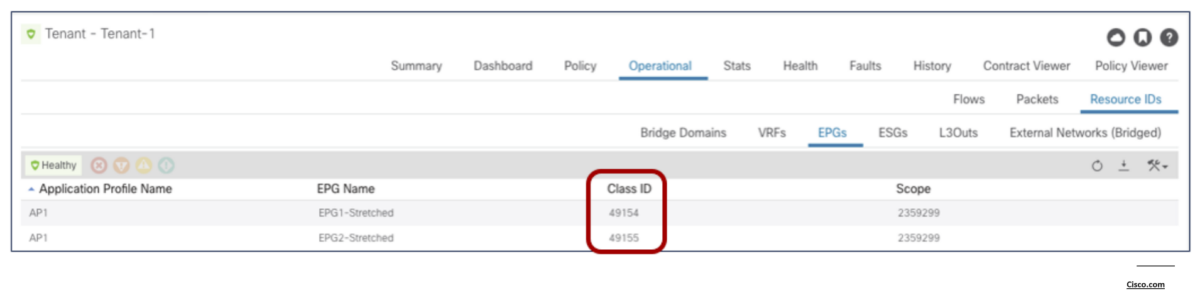

1 3112963 16154555 | 2359299 16252857 [formed] Code language: PHP (php)Sclass translation:

Spine 1101 Site1:

```
Spine1101-Site1# show dcimgr repo sclass-maps
----------------------------------------------------------
            Remote             |          Local
site     Vrf         PcTag     |   Vrf        PcTag     Rel-state
----------------------------------------------------------
2        2359299     49155     |   3112963    49154     [formed]
2        2359299     49154     |   3112963    16387     [formed]
```

Spine 401 Site2:

```
Spine401-Site2# show dcimgr repo sclass-maps
----------------------------------------------------------
            Remote             |          Local
site     Vrf         PcTag     |   Vrf        PcTag     Rel-state
----------------------------------------------------------
1        3112963     49154     |   2359299    49155     [formed]
1        3112963     16387     |   2359299    49154     [formed]
```

Policy Enforcement Preference

If the VRF on APIC is configured as “Unenforced”, the user can change the setting to “Enforced” directly on NDO, or keep it “Unenforced” with the understanding that this configuration does not allow intersite communication to be established.

Other supported features, such as Preferred Groups or vzAny, can be used to remove policy enforcement for inter-EPG communication.
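The two enforcement paragraphs above can be condensed into a small decision function. This is a simplified, hypothetical sketch: `intersite_inter_epg_allowed` and its parameters are illustrative names, and the model ignores contract filters, direction, and scope.

```python
def intersite_inter_epg_allowed(
    vrf_enforced: bool,
    contract_between_epgs: bool,
    both_in_preferred_group: bool = False,
    vzany_permit: bool = False,
) -> bool:
    """Simplified check for traffic between EPGs deployed in different sites."""
    if not vrf_enforced:
        # An "Unenforced" VRF does not allow intersite communication to be established.
        return False
    # With an "Enforced" VRF, a contract is required unless policy enforcement
    # is removed through Preferred Groups or a permit contract applied to vzAny.
    return contract_between_epgs or both_in_preferred_group or vzany_permit


print(intersite_inter_epg_allowed(vrf_enforced=False, contract_between_epgs=True))  # False
print(intersite_inter_epg_allowed(vrf_enforced=True, contract_between_epgs=False,
                                  both_in_preferred_group=True))  # True
```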

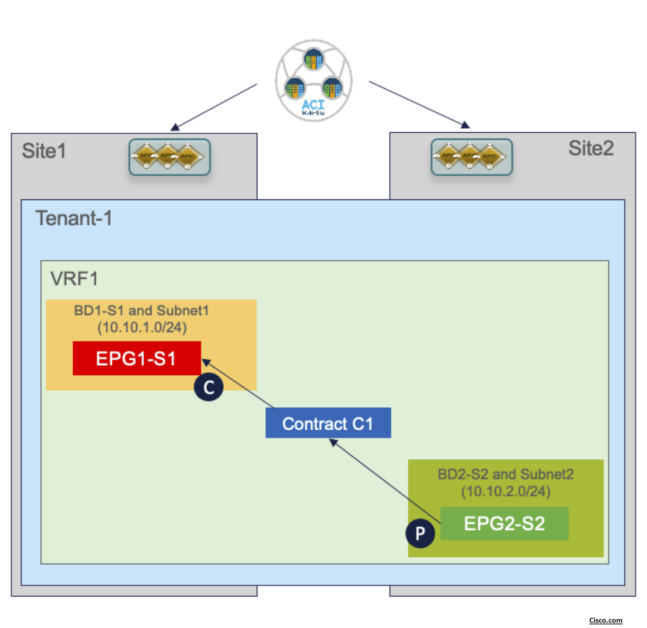

II- Inter-EPG Connectivity Across Sites

Once the local EPG/BD objects are created in each fabric, establishing communication between them requires applying a security policy (contract) that allows all traffic or specific protocols. The contract and the associated filter(s) can be defined in the Template-Stretched, so that they are available to both fabrics.

Once the contract is applied, intersite connectivity can be established between endpoints that are part of the different EPGs.

As a result of the contract, the IP subnet associated with the remote EPG is also installed in the routing table of the leaf node, pointing to the proxy-TEP address provisioned on the local spine nodes.

Once connectivity between the endpoints is established, the remote endpoint is learned as reachable through the VXLAN tunnel. As expected, the destination of that tunnel is the O-UTEP address of the remote site.
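The effect of the contract on the leaf routing table can be sketched as follows. The `Epg` type and `leaf_pervasive_routes` function are hypothetical, the EPG subnets 10.20.1.0/24 and 10.20.2.0/24 are made up for the example, and the proxy-TEP 10.1.112.66 is assumed to be the same Site1 proxy-VTEP shown in the earlier routing-table output. The model covers only which subnet routes get installed, not the data-plane learning that follows.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Epg:
    name: str
    site: str
    subnet: str  # subnet of the EPG's site-local BD (hypothetical values below)


def leaf_pervasive_routes(local_site: str, epgs: list[Epg],
                          contracts: set[frozenset[str]], proxy_tep: str) -> dict:
    """Subnet routes a leaf in local_site installs, all pointing to the spine proxy-TEP.

    Local EPG subnets are installed; a remote EPG subnet is installed only
    when a contract exists between that EPG and a local EPG.
    """
    local = [e for e in epgs if e.site == local_site]
    routes = {ipaddress.ip_network(e.subnet): proxy_tep for e in local}
    for remote in (e for e in epgs if e.site != local_site):
        if any(frozenset((remote.name, loc.name)) in contracts for loc in local):
            routes[ipaddress.ip_network(remote.subnet)] = proxy_tep
    return routes


epgs = [Epg("EPG1-S1", "Site1", "10.20.1.0/24"), Epg("EPG2-S2", "Site2", "10.20.2.0/24")]
proxy = "10.1.112.66"  # assumed Site1 proxy-VTEP

print(sorted(str(n) for n in leaf_pervasive_routes("Site1", epgs, set(), proxy)))
# ['10.20.1.0/24']

contracts = {frozenset(("EPG1-S1", "EPG2-S2"))}
print(sorted(str(n) for n in leaf_pervasive_routes("Site1", epgs, contracts, proxy)))
# ['10.20.1.0/24', '10.20.2.0/24']
```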

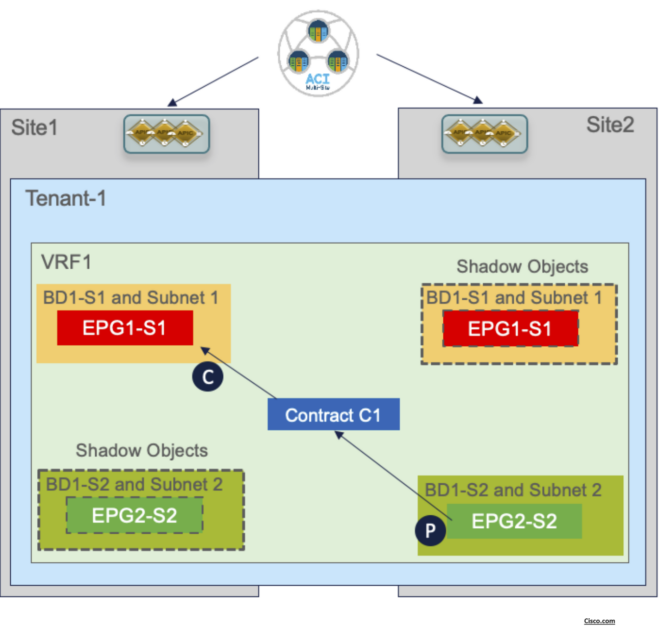

Namespace Translation

In the use case of inter-EPG connectivity between EPGs/BDs that are deployed locally in each fabric, creating a security policy between them leads to the creation of so-called ‘shadow EPGs’.

Shadow objects are required so that they can be assigned the specific resources (Segment IDs, class IDs, etc.) that must be configured in the translation tables of the spines for intersite data-plane communication to succeed. These values are programmed in the spine translation tables so that the spines can perform the proper translation when traffic is exchanged between endpoints of EPG1-S1 in Site1 and endpoints of EPG2-S2 in Site2.

In addition to the translation entries programmed on the spines, the class IDs assigned to the shadow EPGs are also needed to apply the security policy associated with the contract correctly.

As for the Segment IDs, the only translation entry required is the one for the VRF. When routing between sites, the VRF L3 VNID is inserted in the VXLAN header so that the receiving site can perform the Layer 3 lookup in the right routing domain. There is no need to install translation entries for the Segment IDs of the BDs, since no intersite traffic will ever carry those values in the VXLAN header (given that those BDs are not stretched).
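The reasoning above, that only VNIDs which can actually appear in intersite VXLAN headers need translation entries, can be sketched as follows. The `Bd` type and helper functions are hypothetical. VRF VNID 3112963 and BD1-Stretched VNID 16154555 are reused from the Site1 vnid-maps output earlier for illustration, while the VNIDs of BD2 and BD1-S1 are made up. Class ID (sclass) translation for the shadow EPGs is not modelled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bd:
    name: str
    vnid: int
    l2_stretched: bool


def intersite_traffic_types(bd: Bd) -> set[str]:
    # A BD that is not L2-stretched never bridges across sites: only routed traffic leaves.
    return {"routed", "bridged"} if bd.l2_stretched else {"routed"}


def vnids_needing_translation(vrf_vnid: int, bds: list[Bd]) -> set[int]:
    """Collect the VNIDs that can appear in intersite VXLAN headers for this VRF."""
    needed = set()
    for bd in bds:
        for kind in intersite_traffic_types(bd):
            # Routed traffic carries the VRF L3 VNID; bridged traffic carries the BD VNID.
            needed.add(vrf_vnid if kind == "routed" else bd.vnid)
    return needed


# Intra-EPG example: one L2-stretched BD and one local BD (BD2 VNID is made up).
stretched = [Bd("BD1-Stretched", 16154555, True), Bd("BD2", 16000002, False)]
print(sorted(vnids_needing_translation(3112963, stretched)))  # [3112963, 16154555]

# Inter-EPG example: only site-local BDs, so only the VRF VNID needs a mapping.
local_only = [Bd("BD1-S1", 16000003, False)]
print(sorted(vnids_needing_translation(3112963, local_only)))  # [3112963]
```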
