IP Multicast PIM Dense Mode Configuration

Contents

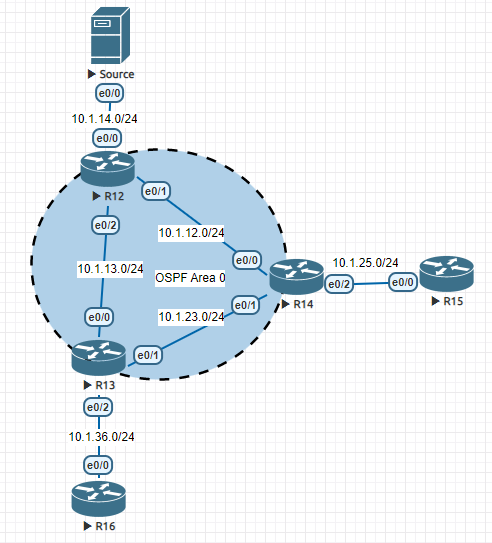

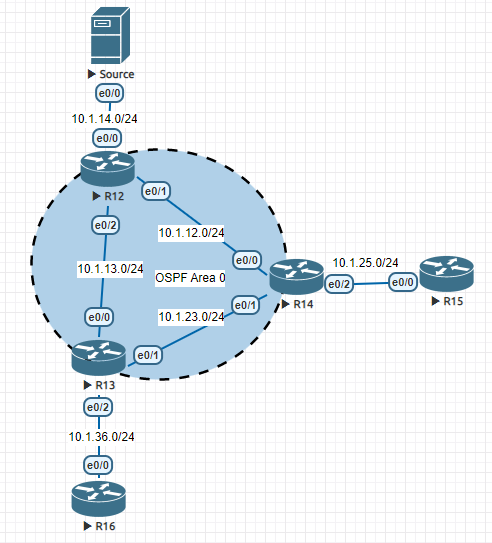

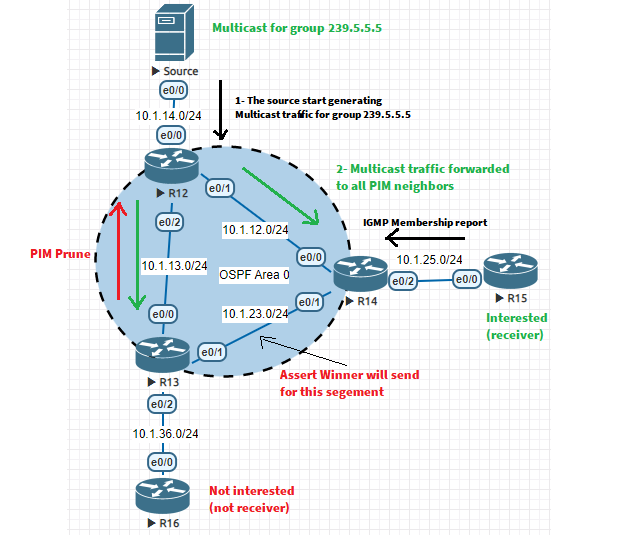

Topology

Note: this Lab is based on some PNETLab resources.

Initial Configuration

Before we start the PIM-DM configuration, let's configure the interfaces and the IGP (OSPF in our case):

R12:

---

interface Ethernet0/0

no shutdown

ip address 10.1.14.1 255.255.255.0

ip ospf 1 area 0

interface Ethernet0/1

no shutdown

ip address 10.1.12.1 255.255.255.0

ip ospf 1 area 0

!

interface Ethernet0/2

no shutdown

ip address 10.1.13.1 255.255.255.0

ip ospf 1 area 0

R14:

---

interface Ethernet0/0

no shutdown

ip address 10.1.12.2 255.255.255.0

ip ospf 1 area 0

interface Ethernet0/1

no shutdown

ip address 10.1.23.2 255.255.255.0

ip ospf 1 area 0

!

interface Ethernet0/2

no shutdown

ip address 10.1.25.2 255.255.255.0

ip ospf 1 area 0

R13:

---

interface Ethernet0/0

no shutdown

ip address 10.1.13.3 255.255.255.0

ip ospf 1 area 0

!

interface Ethernet0/1

no shutdown

ip address 10.1.23.3 255.255.255.0

ip ospf 1 area 0

!

interface Ethernet0/2

no shutdown

ip address 10.1.36.3 255.255.255.0

ip ospf 1 area 0

IP PIM DM Configuration

To enable PIM-DM, we first enable multicast routing globally on each router, then enable PIM dense mode on the interfaces so that they form PIM-DM neighborships and receive IGMP membership reports.

On R12:

R12#conf t

Enter configuration commands, one per line. End with CNTL/Z.

R12(config)#ip multicast-routing

R12(config)#int e0/0

R12(config-if)#ip pim dense-mode

R12(config)#int e0/1

R12(config-if)#ip pim dense-mode

*Sep 3 13:03:28.472: %PIM-5-DRCHG: DR change from neighbor 0.0.0.0 to 10.1.12.1 on interface Ethernet0/1

R12(config-if)#

*Sep 3 13:04:01.576: %PIM-5-NBRCHG: neighbor 10.1.12.2 UP on interface Ethernet0/1

*Sep 3 13:04:01.580: %PIM-5-DRCHG: DR change from neighbor 10.1.12.1 to 10.1.12.2 on interface Ethernet0/1

R12(config-if)#int e0/2

R12(config-if)#ip pim dense-mode

*Sep 3 13:05:25.328: %PIM-5-DRCHG: DR change from neighbor 0.0.0.0 to 10.1.13.1 on interface Ethernet0/2

*Sep 3 13:05:47.082: %PIM-5-NBRCHG: neighbor 10.1.13.3 UP on interface Ethernet0/2

*Sep 3 13:05:47.087: %PIM-5-DRCHG: DR change from neighbor 10.1.13.1 to 10.1.13.3 on interface Ethernet0/2

R12#show ip pim neighbor

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

P - Proxy Capable, S - State Refresh Capable, G - GenID Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

10.1.12.2 Ethernet0/1 00:02:12/00:01:30 v2 1 / DR S P G

10.1.13.3 Ethernet0/2 00:00:26/00:01:18 v2 1 / DR S P G
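
The DR changes logged above follow the PIM designated router election rule: the highest DR priority wins, and a tie is broken by the highest IP address. Here is a minimal sketch of that rule in Python. The function name `elect_dr` is made up for this illustration, and the priority of 1 is the value shown in the neighbor table:

```python
import ipaddress

def elect_dr(candidates):
    """Return the address of the PIM designated router on one segment.

    candidates: list of (ip_string, dr_priority) tuples, including the
    local router. Highest priority wins; ties go to the highest IP.
    """
    return max(
        candidates,
        key=lambda c: (c[1], ipaddress.ip_address(c[0])),
    )[0]

# Segment 10.1.12.0/24 between R12 and R14, both at priority 1.
print(elect_dr([("10.1.12.1", 1), ("10.1.12.2", 1)]))  # 10.1.12.2
# Segment 10.1.13.0/24 between R12 and R13.
print(elect_dr([("10.1.13.1", 1), ("10.1.13.3", 1)]))  # 10.1.13.3
```

This matches the log messages: R12 first elects itself on each segment, then hands the DR role to the neighbor with the higher address once that neighbor comes up.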

On R13:

R13#conf t

Enter configuration commands, one per line. End with CNTL/Z.

R13(config)#ip multicast-routing

R13(config)#int e0/0

R13(config-if)#ip pim dense-mode

*Sep 3 13:05:47.083: %PIM-5-NBRCHG: neighbor 10.1.13.1 UP on interface Ethernet0/0

*Sep 3 13:05:49.072: %PIM-5-DRCHG: DR change from neighbor 0.0.0.0 to 10.1.13.3 on interface Ethernet0/0

R13(config-if)#int e0/1

R13(config-if)#ip pim dense-mode

R13(config-if)#int e0/2

R13(config-if)#ip pim dense-mode

R13(config-if)#end

*Sep 3 13:05:52.423: %PIM-5-NBRCHG: neighbor 10.1.23.2 UP on interface Ethernet0/1

*Sep 3 13:05:54.012: %SYS-5-CONFIG_I: Configured from console by console

*Sep 3 13:05:54.074: %PIM-5-DRCHG: DR change from neighbor 0.0.0.0 to 10.1.23.3 on interface Ethernet0/1

R13#show ip pim neighbor

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

P - Proxy Capable, S - State Refresh Capable, G - GenID Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

10.1.13.1 Ethernet0/0 00:00:14/00:01:30 v2 1 / S P G

10.1.23.2 Ethernet0/1 00:00:09/00:01:35 v2 1 / S P G

On R14:

R14#conf t

Enter configuration commands, one per line. End with CNTL/Z.

R14(config)#int e0/0

R14(config-if)#ip pim dense-mode

*Sep 3 13:04:01.576: %PIM-5-NBRCHG: neighbor 10.1.12.1 UP on interface Ethernet0/0

R14(config-if)#

*Sep 3 13:04:03.568: %PIM-5-DRCHG: DR change from neighbor 0.0.0.0 to 10.1.12.2 on interface Ethernet0/0

R14(config-if)#int e0/1

R14(config-if)#ip pim dense-mode

R14(config-if)#int e0/2

R14(config-if)#ip pim dense-mode

*Sep 3 13:05:08.568: %PIM-5-DRCHG: DR change from neighbor 0.0.0.0 to 10.1.23.2 on interface Ethernet0/1

*Sep 3 13:05:52.422: %PIM-5-NBRCHG: neighbor 10.1.23.3 UP on interface Ethernet0/1

*Sep 3 13:05:52.426: %PIM-5-DRCHG: DR change from neighbor 10.1.23.2 to 10.1.23.3 on interface Ethernet0/1

*Sep 3 13:06:19.636: %SYS-5-CONFIG_I: Configured from console by console

R14#show ip pim neighbor

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

P - Proxy Capable, S - State Refresh Capable, G - GenID Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

10.1.12.1 Ethernet0/0 00:02:23/00:01:19 v2 1 / S P G

10.1.23.3 Ethernet0/1 00:00:32/00:01:42 v2 1 / DR S P G

Note:

- On the routers, the interfaces facing the host are configured with “ip pim dense-mode” in order to be able to send IGMP Membership queries toward the hosts.

- When we check the mroute table on the routers, we find a (*, 224.0.1.40) entry:

R12#show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route,

x - VxLAN group

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.40), 00:31:14/00:02:51, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/2, Forward/Dense, 00:28:53/stopped

Ethernet0/1, Forward/Dense, 00:31:14/stopped

PIM sparse mode uses an RP (Rendezvous Point), and Cisco routers support a protocol called "Auto-RP" to find the RP in the network automatically. Auto-RP sends its RP discovery messages to this multicast group address, 224.0.1.40.

PIM dense mode doesn't use RPs, so our routers have no real reason to listen to the 224.0.1.40 group address. Despite this, Auto-RP is enabled by default once PIM is enabled.
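
The flags on this entry (DCL) can be read against the legend printed at the top of the output. As a small illustration, the sketch below expands a flags string using a subset of that legend. The dictionary and the function name `decode_flags` are assumptions made for this example and are not part of IOS:

```python
# Subset of the flag legend printed by "show ip mroute" above.
MROUTE_FLAGS = {
    "D": "Dense",
    "S": "Sparse",
    "C": "Connected",
    "L": "Local",
    "P": "Pruned",
    "T": "SPT-bit set",
}

def decode_flags(flags):
    """Expand a flags string such as 'DCL' into readable names."""
    return [MROUTE_FLAGS.get(ch, "unknown flag " + ch) for ch in flags]

print(decode_flags("DCL"))  # ['Dense', 'Connected', 'Local']
```

For (*, 224.0.1.40), the L flag is the interesting one: it marks the router itself as a member of the group, which is the Auto-RP listening behaviour described above.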

Generate Multicast traffic and Verification

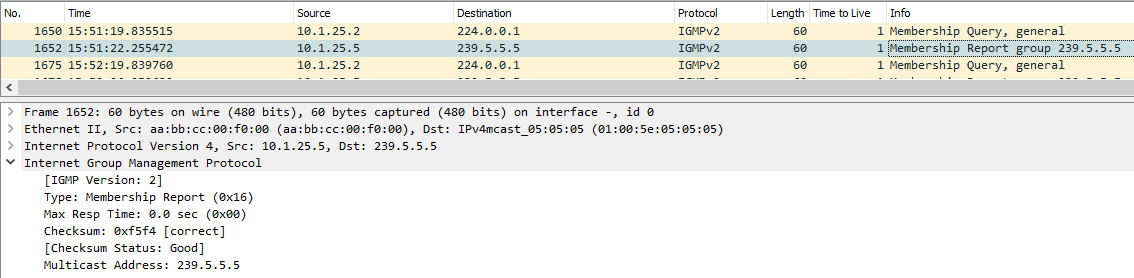

First, we will configure the host R15 to join the multicast group 239.5.5.5, so that it receives traffic sent to this group by the source:

R15(config)#int e0/0

R15(config-if)#ip igmp join-group 239.5.5.5

We can verify that R14 has received the IGMP membership report for the 239.5.5.5 group from the host R15:

As a result, R14 has installed the group on its mroute:

R14#show ip mroute

(*, 239.5.5.5), 00:07:05/00:01:58, RP 0.0.0.0, flags: DC

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/2, Forward/Dense, 00:07:05/stopped

Ethernet0/1, Forward/Dense, 00:07:05/stopped

Ethernet0/0, Forward/Dense, 00:07:05/stopped

At this point no multicast traffic has been generated, so the router does not know any source for this group. That's why only a (*, G) entry exists, with Null as its incoming interface.

Now, let’s generate multicast traffic from the source by pinging the multicast group:

source#ping 239.5.5.5 repeat 1000

Type escape sequence to abort.

Sending 1000, 100-byte ICMP Echos to 239.5.5.5, timeout is 2 seconds:

.

Reply to request 1 from 10.1.25.5, 1 ms

Reply to request 2 from 10.1.25.5, 1 ms

Reply to request 3 from 10.1.25.5, 1 ms

Reply to request 4 from 10.1.25.5, 1 ms

Reply to request 5 from 10.1.25.5, 1 ms

Now, if we recheck the ip mroute on R14:

R14#show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route,

x - VxLAN group

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.5.5.5), 02:22:46/stopped, RP 0.0.0.0, flags: DC

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/2, Forward/Dense, 02:22:46/stopped

Ethernet0/1, Forward/Dense, 02:22:46/stopped

Ethernet0/0, Forward/Dense, 02:22:46/stopped

(10.1.14.4, 239.5.5.5), 00:00:19/00:02:40, flags: T

Incoming interface: Ethernet0/0, RPF nbr 10.1.12.1

Outgoing interface list:

Ethernet0/1, Prune/Dense, 00:00:19/00:02:40

Ethernet0/2, Forward/Dense, 00:00:19/stopped

R14 is receiving multicast traffic for 239.5.5.5 from the source (10.1.14.4). As a result, R14 added an (S,G) multicast route entry, (10.1.14.4, 239.5.5.5).

Multicast traffic has been arriving on interface E0/0 (which faces R12) for 19 seconds. If the router receives no multicast traffic from 10.1.14.4 for the group 239.5.5.5 for another 2 minutes 40 seconds, the route entry will be removed.

The T flag indicates that the shortest-path source tree is used (from the source to the receivers).

The RPF neighbor (path toward the source) is 10.1.12.1 which is R12.
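
The RPF lookup behind this entry can be sketched as a longest-prefix match of the source address against the unicast routing table. The snippet below is only an illustration: `rpf_lookup` is a hypothetical helper, and the route lists are assumptions written to match the lab addressing, not output taken from the routers.

```python
import ipaddress

def rpf_lookup(source, routes):
    """Return (interface, rpf_neighbor) for a multicast source.

    routes: list of (prefix, interface, next_hop) tuples, where next_hop
    is None for a directly connected network. Longest prefix wins.
    """
    src = ipaddress.ip_address(source)
    matches = [
        (ipaddress.ip_network(prefix), iface, hop)
        for prefix, iface, hop in routes
        if src in ipaddress.ip_network(prefix)
    ]
    if not matches:
        return None  # no route to the source: the RPF check fails
    net, iface, hop = max(matches, key=lambda m: m[0].prefixlen)
    return iface, hop or "0.0.0.0"

# Hypothetical unicast routes, written to match the lab addressing.
r14_routes = [("10.1.14.0/24", "Ethernet0/0", "10.1.12.1"),
              ("10.1.12.0/24", "Ethernet0/0", None)]
r12_routes = [("10.1.14.0/24", "Ethernet0/0", None)]

print(rpf_lookup("10.1.14.4", r14_routes))  # ('Ethernet0/0', '10.1.12.1')
print(rpf_lookup("10.1.14.4", r12_routes))  # ('Ethernet0/0', '0.0.0.0')
```

Traffic from 10.1.14.4 that arrives on any interface other than the one returned here fails the RPF check and is dropped.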

If the source is directly connected, the RPF neighbor is shown as 0.0.0.0. This is the case on R12:

R12#show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route,

x - VxLAN group

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.5.5.5), 00:00:08/stopped, RP 0.0.0.0, flags: D

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/2, Forward/Dense, 00:00:08/stopped

Ethernet0/1, Forward/Dense, 00:00:08/stopped

(10.1.14.4, 239.5.5.5), 00:00:08/00:02:51, flags: T

Incoming interface: Ethernet0/0, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/1, Forward/Dense, 00:00:08/stopped

Ethernet0/2, Prune/Dense, 00:00:08/00:02:51

R12 stopped forwarding multicast traffic for this group out of interface Ethernet0/2 (which connects to R13) because a PIM Prune message was received on this interface.

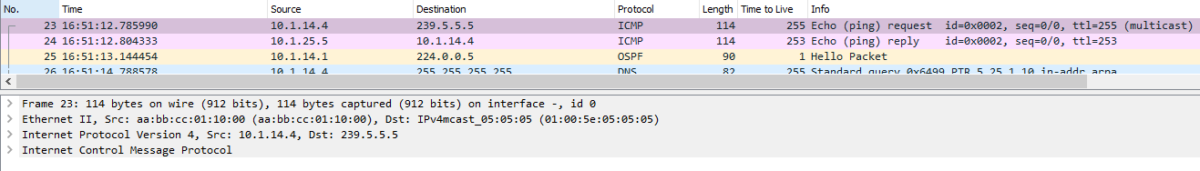

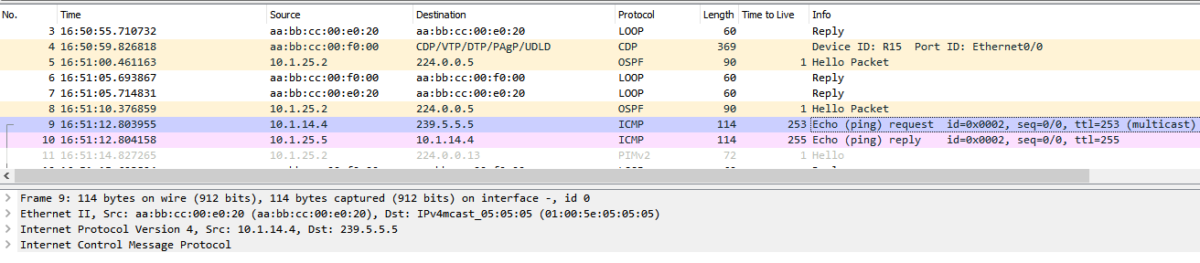

We can check the packet flow via Wireshark:

Packet flow of the multicast traffic with PIM-DM

1- The source starts generating multicast traffic toward the multicast group 239.5.5.5.

2- On interface e0/0 of R12, we see an ICMP packet coming from the source IP toward 239.5.5.5.

3- R12 detects this multicast traffic and adds an entry (10.1.14.4, 239.5.5.5).

Initially, the traffic is flooded to all interfaces with PIM neighborship Up (mentioned in the Outgoing interface list).

4- R14 receives the multicast traffic (echo request toward 239.5.5.5) and checks the IGMP table for this group:

R14#show ip igmp groups

IGMP Connected Group Membership

Group Address Interface Uptime Expires Last Reporter Group Accounted

239.5.5.5 Ethernet0/2 03:33:34 00:02:26 10.1.25.5

As a result, R14 will forward the traffic toward the receivers according to the IGMP table, in our case via interface Eth0/2 to the host R15.

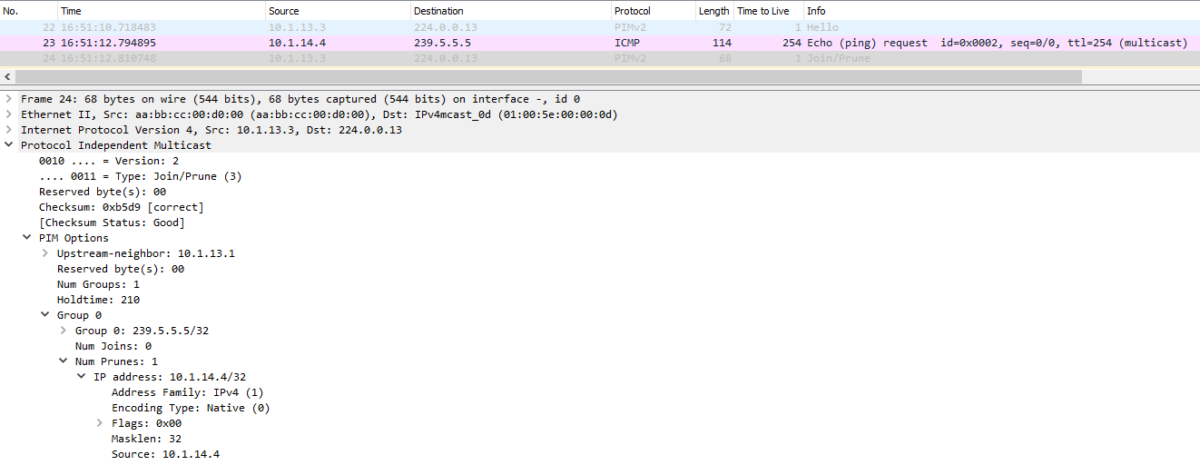

5- When R13 receives the traffic, it will also check its IGMP table and see that there is no IGMP group matching 239.5.5.5:

R13#show ip igmp groups

IGMP Connected Group Membership

Group Address Interface Uptime Expires Last Reporter Group Accounted

So, R13 will drop the multicast traffic for the group and send a PIM Prune message toward R12, telling it to stop sending traffic for this group because R13 has no receivers for it.

If we check the mroute table on R13, we see that the incoming interface is e0/0 (from R12).

Also, the outgoing interface E0/1 toward R14 is pruned, and the A flag shows that R13 is the assert winner on that segment (R13 and R14 both received the multicast traffic from R12). If a host on subnet 10.1.23.0/24 wants to receive multicast traffic for this group, only the assert winner (marked with the A flag) will forward the traffic to the host, in order to avoid duplicate packets.

R13#show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route,

x - VxLAN group

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.5.5.5), 00:00:11/stopped, RP 0.0.0.0, flags: D

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/1, Forward/Dense, 00:00:11/stopped

Ethernet0/0, Forward/Dense, 00:00:11/stopped

(10.1.14.4, 239.5.5.5), 00:00:11/00:02:53, flags: PT

Incoming interface: Ethernet0/0, RPF nbr 10.1.13.1

Outgoing interface list:

Ethernet0/1, Prune/Dense, 00:00:11/00:02:50, A
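
The per-router decision in steps 4 and 5 can be summarised in a few lines of Python. This is a simplified sketch, not the full PIM-DM state machine: `dense_mode_decision` is a hypothetical name, and the inputs are filled in by hand from the outputs above.

```python
def dense_mode_decision(oil, igmp_members):
    """Decide what a PIM-DM router does with an (S,G) flow.

    oil: dict mapping outgoing interface -> "Forward" or "Prune"
         (interfaces with downstream PIM neighbors).
    igmp_members: set of interfaces with IGMP members for the group.
    Returns (interfaces to forward on, whether to send a Prune upstream).
    """
    forward = sorted(
        {i for i, state in oil.items() if state == "Forward"} | igmp_members
    )
    return forward, not forward

# R14: e0/1 toward R13 is pruned, R15 joined on e0/2.
print(dense_mode_decision({"Ethernet0/1": "Prune"}, {"Ethernet0/2"}))
# (['Ethernet0/2'], False)

# R13: e0/1 toward R14 is pruned and there are no IGMP members.
print(dense_mode_decision({"Ethernet0/1": "Prune"}, set()))
# ([], True)
```

R13 ends up with an empty forwarding list, which is what the P flag on its (S,G) entry reflects, so it sends a Prune toward R12.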

PIM Graft Message

In our example, only R15 is receiving the traffic from the source for the group 239.5.5.5. As a result, interfaces with no receivers are pruned.

For example, R13, which has sent a PIM Prune message toward R12 to stop the traffic for this group, can at any moment get a host that wants to receive this traffic (R16). The problem is that R12 only starts forwarding to R13 again once the prune state has timed out, 3 minutes after the last Prune message, so the new receiver could wait that long.

To avoid this inefficiency, the PIM router that has new receivers sends a PIM Graft message to its upstream PIM neighbor, asking it to unprune the interface toward it immediately.
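
To make the difference concrete, here is a minimal model of one outgoing interface on the upstream router. It is a sketch under stated assumptions: the class `DownstreamInterface` is invented for this example, and the 180-second hold time simply mirrors the 3 minutes mentioned above.

```python
PRUNE_HOLD_SECONDS = 180  # assumption: the 3-minute prune lifetime from the text

class DownstreamInterface:
    """Forward/Prune state of one outgoing interface for one (S,G)."""

    def __init__(self):
        self.pruned_until = None  # None means Forward

    def receive_prune(self, now):
        self.pruned_until = now + PRUNE_HOLD_SECONDS

    def receive_graft(self):
        self.pruned_until = None  # unprune immediately

    def state(self, now):
        if self.pruned_until is not None and now < self.pruned_until:
            return "Prune"
        return "Forward"

e0_2 = DownstreamInterface()      # R12's interface toward R13
e0_2.receive_prune(now=0)
print(e0_2.state(now=60))         # Prune
print(e0_2.state(now=180))        # Forward (prune timed out)

e0_2.receive_prune(now=200)
e0_2.receive_graft()              # R13 sends a Graft for its new receiver
print(e0_2.state(now=201))        # Forward
```

Without the Graft, the interface would have stayed in the Prune state until second 380 in this model.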

Let’s test this by adding the host R16 as a receiver for the multicast group 239.5.5.5:

R16(config-if)#int e0/0

R16(config-if)#ip igmp join-group 239.5.5.5

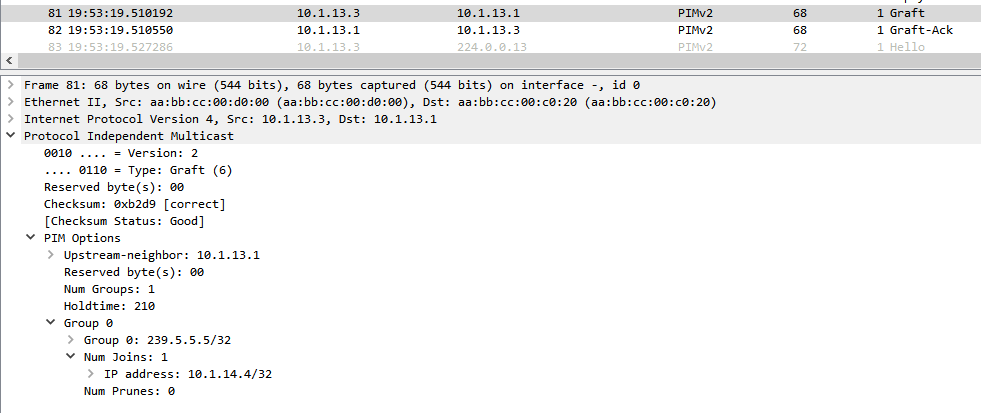

On Wireshark, we can see the PIM Graft message sent from R13 to R12:

On R12, we can see that e0/2 toward R13 was unpruned and has moved to the Forward state:

R12#show ip mroute

(*, 239.5.5.5), 00:22:23/stopped, RP 0.0.0.0, flags: D

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/2, Forward/Dense, 00:22:23/stopped

Ethernet0/1, Forward/Dense, 00:22:23/stopped

(10.1.14.4, 239.5.5.5), 00:22:23/00:02:39, flags: T

Incoming interface: Ethernet0/0, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/1, Forward/Dense, 00:22:23/stopped

Ethernet0/2, Forward/Dense, 00:19:23/stopped
