ACI Multi-Site Overview and Basics

Reference Note: This post is heavily based on the latest Cisco ACI Multi-Site white paper.


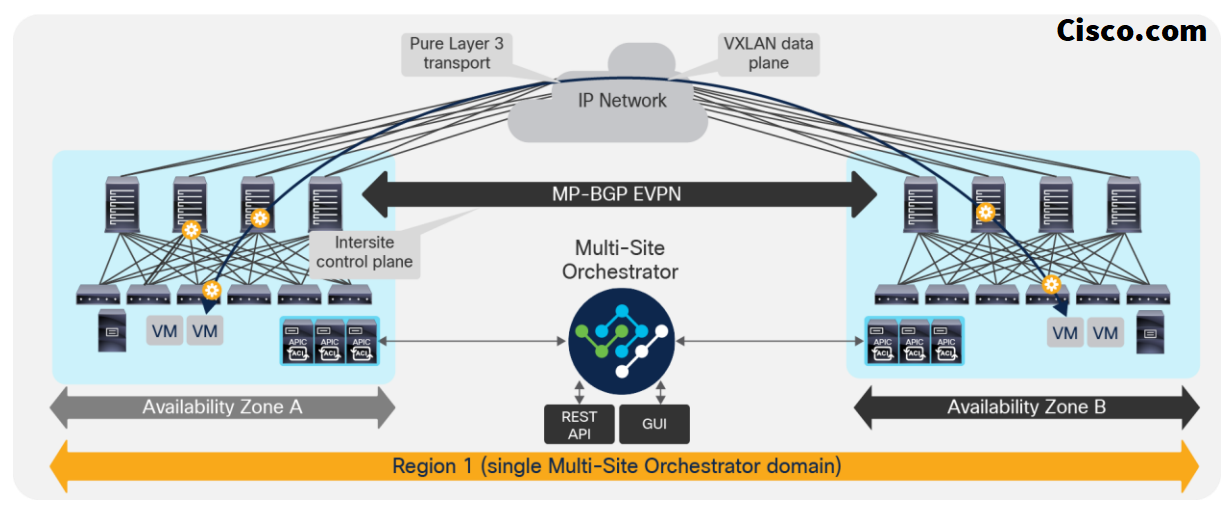

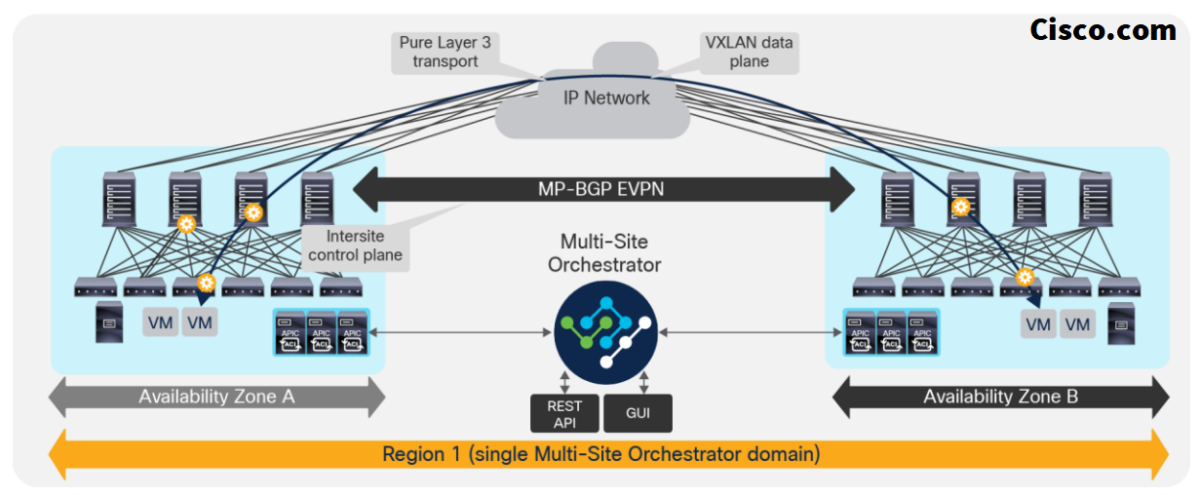

ACI Multi-Site Design and Architecture

The Multi-Site design is built from the following functional components:

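- Cisco Multi-Site Orchestrator (MSO): This component is the intersite policy manager. It provides single-pane management, enabling you to monitor the health-score state for all the interconnected sites. It also allows you to define, in a centralized place, all the intersite policies that are then pushed to the different APIC domains for rendering on the physical switches of each fabric.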

- Intersite control plane: Endpoint reachability information is exchanged across sites using the MP-BGP EVPN control plane. This approach allows the exchange of MAC and IP address information for the endpoints that communicate across sites. MP-BGP EVPN sessions are established between the spine nodes deployed in separate fabrics that are managed by the same instance of Cisco Multi-Site Orchestrator.

- Intersite data plane: All communication (Layer 2 or Layer 3) between endpoints connected to different sites is achieved by establishing site-to-site Virtual Extensible LAN (VXLAN) tunnels across a generic IP network that interconnects the various sites.
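The intersite data plane relies on VXLAN encapsulation, and the VNID discussed in the name-space normalization section travels in the header's 24-bit VNI field. As a minimal sketch, assuming the standard RFC 7348 header layout, the snippet below packs and unpacks that field. The function names are hypothetical, and ACI itself uses an extended VXLAN header that also carries the source class ID; those extensions are not modeled here.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid (RFC 7348)


def pack_vxlan_header(vnid: int) -> bytes:
    """Build the 8-byte VXLAN header that precedes the original Ethernet frame."""
    if not 0 <= vnid < 2**24:
        raise ValueError("VNID must fit in 24 bits")
    # Byte 0: flags, bytes 1-3: reserved, bytes 4-6: VNI, byte 7: reserved.
    return struct.pack("!B3xI", VXLAN_FLAG_VNI_VALID, vnid << 8)


def unpack_vnid(header: bytes) -> int:
    """Extract the 24-bit VNI from the first 8 bytes of a VXLAN header."""
    flags, word = struct.unpack("!B3xI", header[:8])
    if not flags & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return word >> 8  # drop the trailing reserved byte
```

For example, `pack_vxlan_header(0x123456)` yields the bytes `08 00 00 00 12 34 56 00`, and `unpack_vnid()` recovers `0x123456` from them.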

ACI Multi-Site Requirements

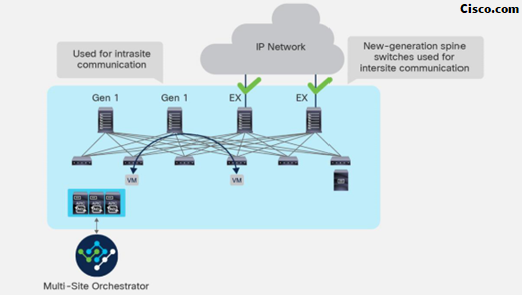

- Hardware requirement:

Only Cisco Nexus® EX-platform (and newer) spine switches are supported for Multi-Site connectivity. First-generation spine switches can coexist with the newer spine models, as long as only the newer spines are connected to the external IP network and used for intersite communication.

- Latency:

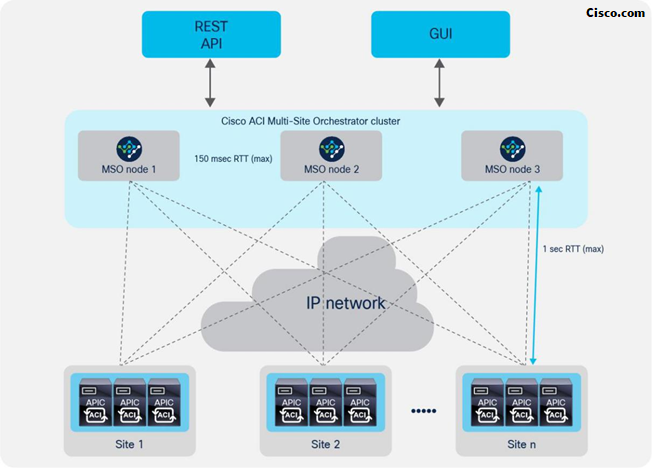

The supported Round-Trip Time (RTT) latency between Multi-Site Orchestrator nodes in the cluster is up to 150 milliseconds (ms), which means that the Orchestrator virtual machines can be geographically dispersed across separate physical locations if required. The Multi-Site Orchestrator cluster communicates with each site’s APIC cluster over a secure TCP connection, and all API calls are asynchronous. The current maximum supported RTT between the Multi-Site Orchestrator cluster and each site’s APIC cluster is 1 second.

- ISN OSPF support:

The Inter-Site Network (ISN) that interconnects the sites must support Open Shortest Path First (OSPF) to establish peering with the spine nodes deployed in each site.

- Increased MTU:

The ISN also requires MTU support above the default 1500-byte value. VXLAN data-plane traffic adds 50 bytes of overhead (54 bytes if the IEEE 802.1Q header of the original frame is preserved), so make sure that all the Layer 3 interfaces in the ISN infrastructure can support that increase in MTU size.

The recommendation is to configure at least 100 bytes above the MTU used by the endpoints, which leaves headroom beyond the 50/54 bytes of VXLAN overhead:

- If the endpoints are configured to support jumbo frames (9000 bytes), then the ISN should be configured with at least a 9100-byte MTU value.

- If the endpoints are instead configured with the default 1500-byte value, then the ISN MTU size can be reduced to 1600 bytes.
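The requirements above can be collected into a small pre-deployment check. This is a minimal sketch, not a Cisco tool: the `Spine` record, its `ex_or_newer` flag, and the `check_multisite` helper are hypothetical, and the limits are the ones quoted in this post (150 ms between Orchestrator nodes, 1 second to each APIC cluster, 50/54 bytes of VXLAN overhead, 100 bytes of recommended headroom).

```python
from dataclasses import dataclass

VXLAN_OVERHEAD = 50          # bytes added by VXLAN encapsulation
DOT1Q_EXTRA = 4              # extra bytes when the original 802.1Q tag is preserved
RECOMMENDED_HEADROOM = 100   # recommended margin above the endpoint MTU
MAX_MSO_NODE_RTT_MS = 150    # between Multi-Site Orchestrator cluster nodes
MAX_MSO_APIC_RTT_MS = 1000   # between the MSO cluster and each site's APIC cluster


def min_isn_mtu(endpoint_mtu: int, dot1q_preserved: bool = False) -> int:
    """Smallest ISN MTU that still carries a full-size endpoint packet after VXLAN."""
    return endpoint_mtu + VXLAN_OVERHEAD + (DOT1Q_EXTRA if dot1q_preserved else 0)


def recommended_isn_mtu(endpoint_mtu: int) -> int:
    """Recommended ISN MTU: at least 100 bytes above the endpoint MTU."""
    return endpoint_mtu + RECOMMENDED_HEADROOM


@dataclass
class Spine:
    name: str
    ex_or_newer: bool        # hypothetical flag: Nexus EX platform or newer
    connected_to_isn: bool   # used for intersite communication


def check_multisite(spines, isn_mtu, endpoint_mtu, mso_node_rtts_ms, mso_apic_rtt_ms):
    """Return a list of human-readable requirement violations (empty if none)."""
    problems = []
    for s in spines:
        if s.connected_to_isn and not s.ex_or_newer:
            problems.append(f"{s.name}: first-generation spine connected to the ISN")
    # Worst case: assume the original 802.1Q header may be preserved.
    if isn_mtu < min_isn_mtu(endpoint_mtu, dot1q_preserved=True):
        problems.append(f"ISN MTU {isn_mtu} too small for {endpoint_mtu}-byte endpoint MTU")
    if any(rtt > MAX_MSO_NODE_RTT_MS for rtt in mso_node_rtts_ms):
        problems.append(f"MSO node RTT above {MAX_MSO_NODE_RTT_MS} ms")
    if mso_apic_rtt_ms > MAX_MSO_APIC_RTT_MS:
        problems.append(f"MSO-to-APIC RTT above {MAX_MSO_APIC_RTT_MS} ms")
    return problems
```

The check compares the ISN MTU against the worst-case 54-byte overhead only; `recommended_isn_mtu()` gives the more generous target (9100 bytes for 9000-byte endpoints, 1600 bytes for 1500-byte endpoints).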


Name-space normalization (shadow EPGs)

Traffic between sites is VXLAN encapsulated, and the header carries two values that identify the source of the traffic. The VXLAN Network Identifier (VNID) identifies, for the endpoint sourcing the traffic:

- The Bridge Domain (BD), for Layer 2 communication

- The Virtual Routing and Forwarding (VRF) instance, for Layer 3 (intra-VRF) traffic

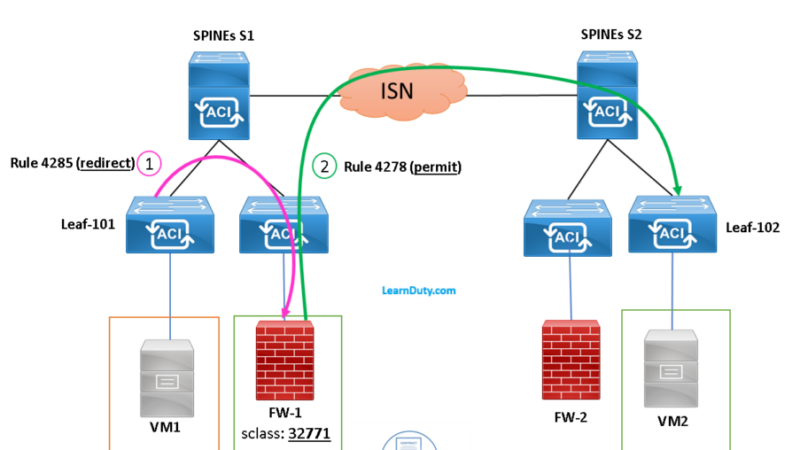

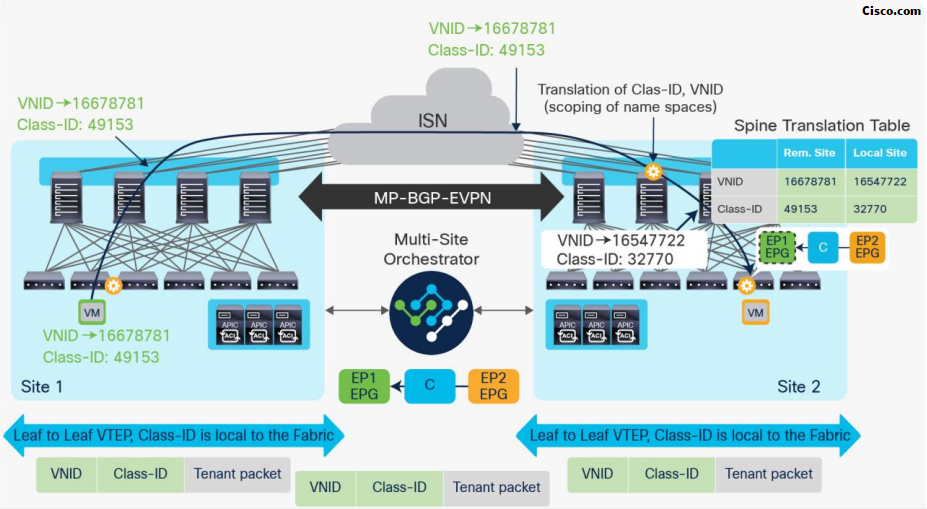

The class ID is the unique identifier of the source Endpoint Group (EPG). However, both the VNID and the class ID are locally significant within a fabric. Because a completely separate and independent APIC domain and fabric are deployed at the destination site, a translation function (also referred to as “name-space normalization”) must be applied before the traffic is forwarded inside the receiving site, so that the locally significant values identifying that same source EPG, bridge domain, and VRF instance are used.

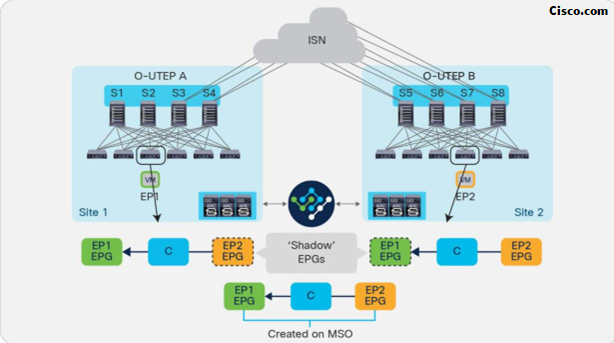

When the desired configuration is defined on Cisco Multi-Site Orchestrator and then pushed to the different APIC domains, specific copies of EPGs, named “shadow EPGs,” are automatically created in each APIC domain.

This ensures that the whole configuration centrally defined on MSO can be locally instantiated in each site and the security policy properly enforced, even when each EPG is only locally defined and not stretched across sites (specific VNIDs and class IDs are assigned to the shadow objects in each APIC domain).

In the example above, the yellow EP2 EPG (and its associated BD) is created as a “shadow” EPG in APIC domain 1, whereas the green EP1 EPG (and its associated BD) is a “shadow” EPG in APIC domain 2. Up to Cisco ACI Release 5.0(1), the shadow EPGs and BDs are not easily distinguishable in the APIC GUI, so it is quite important to be aware of their existence and role.
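The shadow-object behavior in that example can be sketched as follows. Each `ApicDomain` is a hypothetical stand-in for one site's APIC, allocating class IDs and BD VNIDs from its own independent counters (the starting values are arbitrary). For simplicity, the sketch assumes shadows are created only for remote EPGs that a local EPG has a contract with, and it folds each EPG's associated BD into a single VNID value.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class ApicDomain:
    """Hypothetical model of one site's APIC domain, which allocates its own IDs."""
    site: str
    first_class_id: int
    first_vnid: int
    # EPG name -> (class_id, bd_vnid, is_shadow)
    epgs: dict = field(default_factory=dict)

    def __post_init__(self):
        # Independent counters: values are only meaningful inside this fabric.
        self._class_ids = itertools.count(self.first_class_id)
        self._vnids = itertools.count(self.first_vnid)

    def instantiate(self, epg: str, shadow: bool) -> None:
        if epg not in self.epgs:
            self.epgs[epg] = (next(self._class_ids), next(self._vnids), shadow)


def push(epg_sites: dict, contracts: list, domains: dict) -> None:
    """Render a centrally defined policy in every APIC domain."""
    # Real objects: every site where the EPG is defined (more than one if stretched).
    for epg, sites in epg_sites.items():
        for site in sites:
            domains[site].instantiate(epg, shadow=False)
    # Shadow objects: a site needs a local copy of any remote EPG it has a contract with.
    for a, b in contracts:
        for local, peer in ((a, b), (b, a)):
            for site in epg_sites[local]:
                if site not in epg_sites[peer]:
                    domains[site].instantiate(peer, shadow=True)
```

Running `push()` for the example (EPG1 defined only in site 1, EPG2 only in site 2, one contract between them) leaves a shadow EPG2 in site 1 and a shadow EPG1 in site 2, each with IDs that differ from the real object's IDs in the other site.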

Since both APIC domains are completely independent of each other, different VNID and class-ID values are expected to be assigned to a given EPG (the “real” and the “shadow” copy) across sites. This implies that those values must be translated on the spines receiving data-plane traffic from a remote site before the traffic is injected into the local site.

Each EPG in the local site has a different class ID from its shadow copy in the remote site, and VNIDs are likewise local to each fabric. The role of the receiving spines is to translate the class ID and VNID assigned by the remote site into the values assigned to the corresponding (real or shadow) objects in the local site.

- Intra-EPG: The translation table entries on the spine nodes are always populated when an EPG is stretched across sites. This is required to allow intra-EPG communication, which is permitted by default.

- Inter-EPG: For inter-EPG communication between sites, a contract between the EPGs is required to trigger the proper population of those translation tables.
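The two population rules can be modeled with a hypothetical `SpineTranslationTable` on the receiving spine, keyed by the values the remote site assigned and returning the values the local site assigned to its copy of the same EPG (the real EPG if stretched, its shadow otherwise). This is a simplified sketch under stated assumptions: class ID and VNID are translated through independent lookup tables, VRF VNIDs are not modeled separately, and traffic with no entry is simply treated as dropped.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EpgIds:
    class_id: int  # locally significant EPG identifier
    vnid: int      # locally significant VNID of the EPG's BD


class SpineTranslationTable:
    """Hypothetical model of the translation table on a receiving spine."""

    def __init__(self):
        self._class_map = {}  # remote class ID -> local class ID
        self._vnid_map = {}   # remote VNID -> local VNID

    def add(self, remote: EpgIds, local: EpgIds) -> None:
        self._class_map[remote.class_id] = local.class_id
        self._vnid_map[remote.vnid] = local.vnid

    def translate(self, class_id: int, vnid: int):
        """Map remote values to local ones; None means no entry (treated as dropped here)."""
        if class_id not in self._class_map or vnid not in self._vnid_map:
            return None
        return self._class_map[class_id], self._vnid_map[vnid]


def populate(table, remote_ids, local_ids, stretched, contracts):
    """remote_ids / local_ids: EPG name -> EpgIds as assigned by each APIC domain."""
    needed = set(stretched)      # intra-EPG: always populated for stretched EPGs
    for a, b in contracts:       # inter-EPG: populated only when a contract exists
        needed.update((a, b))
    for epg in needed:
        # The local copy is the real EPG if stretched, otherwise its shadow.
        if epg in remote_ids and epg in local_ids:
            table.add(remote_ids[epg], local_ids[epg])
```

For example, with `Web` stretched, a contract between `Web` and `App`, and a `DB` EPG that has no contract, the table translates traffic sourced from `Web` and `App` but has no entry for `DB`.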

Nexus Dashboard Orchestrator

Cisco Multi-Site Orchestrator (MSO), renamed Nexus Dashboard Orchestrator (NDO) in later releases, is responsible for provisioning, health monitoring, and managing the full lifecycle of Cisco ACI networking policies and tenant policies across Cisco ACI sites.

Its key functions are:

- Create and manage Cisco Multi-Site Orchestrator users and administrators through RBAC rules.

- Add, delete, and modify Cisco ACI sites.

- Use the health dashboard to monitor the health, faults, and logs of intersite policies for all the Cisco ACI fabrics that are part of the Cisco Multi-Site domain.

MSO Schemas and Templates

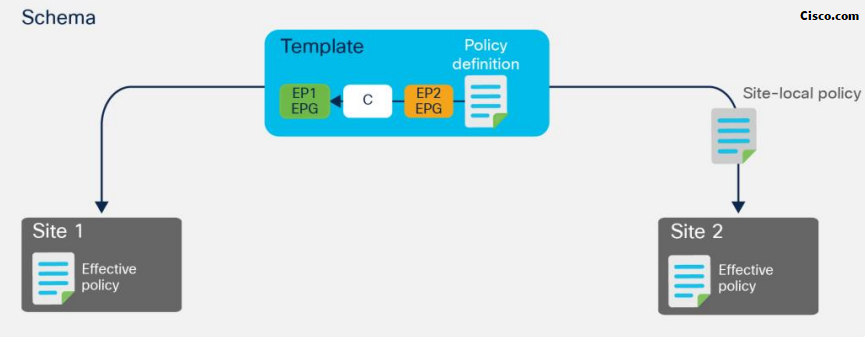

- MSO Templates: Tenant-specific policies (EPGs, BDs, VRFs, contracts, etc.) are always created on MSO inside a given template, and each template is always associated with one (and only one) tenant.

- MSO Schemas: Multiple templates can then be grouped together inside a schema, which essentially represents a container of templates.
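The schema/template relationship can be expressed as a tiny data model. The `Template` and `Schema` classes below are hypothetical illustrations, not the MSO object model or API; storing `tenant` as a single field is what encodes the one-tenant-per-template rule.

```python
from dataclasses import dataclass, field


@dataclass
class Template:
    """Hypothetical model: a template belongs to one (and only one) tenant."""
    name: str
    tenant: str
    policies: list = field(default_factory=list)  # e.g. ["VRF:VRF1", "BD:BD1", "EPG:Web"]

    def __post_init__(self):
        if not self.tenant:
            raise ValueError("a template must be associated with exactly one tenant")


@dataclass
class Schema:
    """A schema is essentially a container of templates."""
    name: str
    templates: list = field(default_factory=list)

    def add(self, template: Template) -> "Schema":
        self.templates.append(template)
        return self  # allow chaining

    def tenants(self) -> set:
        return {t.tenant for t in self.templates}
```

For instance, a schema holding a "Stretched" template and a "Site1-Only" template, both for a tenant named "Prod", reports `{"Prod"}` from `tenants()`.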

When you define intersite policies, Cisco Multi-Site Orchestrator also properly programs the required name-space translation rules on the Multi-Site-capable spine switches across sites.

Cisco ACI Multi-Site License

Cisco ACI Multi-Site requires the purchase of the following:

- ACI spine-leaf architecture with an APIC cluster for each fabric

- License for the Cisco Multi-Site virtual appliance: ACI-MSITE-VAPPL= (This single SKU is sold as a cluster of three Virtual Machines [VMs].)

- One ACI Advantage license per leaf switch in each of the connected fabrics

| Product SKU | Product description | Number of virtual appliances |
|---|---|---|
| ACI-MSITE-VAPPL= | Cisco ACI Multi-Site virtual appliance | 3 |

The Advantage license applies only to leaf switches; there is no Advantage license option for spine switches.
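The purchase list above reduces to simple arithmetic. The hypothetical `multisite_bom()` helper below takes a leaf count per fabric (fabric names and counts in any input are illustrative) and returns one APIC cluster per fabric, a single ACI-MSITE-VAPPL= SKU, and one Advantage license per leaf switch. Spine switches are not counted because the Advantage license applies only to leaves.

```python
def multisite_bom(leaves_per_fabric: dict) -> dict:
    """Hypothetical helper: items to purchase for a given set of fabrics.

    leaves_per_fabric maps a fabric name to its number of leaf switches.
    Spine switches are deliberately not counted: the Advantage license
    applies to leaf switches only.
    """
    if any(n < 0 for n in leaves_per_fabric.values()):
        raise ValueError("leaf counts must be non-negative")
    return {
        "APIC clusters": len(leaves_per_fabric),  # one per fabric
        "ACI-MSITE-VAPPL=": 1,                    # single SKU, cluster of three VMs
        "ACI Advantage leaf licenses": sum(leaves_per_fabric.values()),
    }
```

For two fabrics with 20 and 12 leaf switches, it returns 2 APIC clusters, 1 ACI-MSITE-VAPPL= SKU, and 32 Advantage leaf licenses.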